Chinese AI start-up DeepSeek began 2026 by publishing, on January 1, a technical paper co-authored by founder Liang Wenfeng that proposes a rethink of how foundational AI models are trained. The paper reflects the company's effort to develop cost-effective large AI models while keeping pace with better-funded US competitors.

For industry observers, DeepSeek’s papers often provide early insight into the engineering choices behind the start-up’s next major model release.

Manifold-Constrained Hyper-Connections (mHC) Introduced

The paper presents Manifold-Constrained Hyper-Connections (mHC), a new method aimed at improving model training efficiency. DeepSeek researchers tested mHC on models with 3 billion, 9 billion, and 27 billion parameters and found it scaled effectively without adding significant computational load.

“Empirical results confirm that mHC effectively … [enables] stable large-scale training with superior scalability compared with conventional HC (hyper-connections),” wrote the research team led by Zhenda Xie, Yixuan Wei, and Huanqi Cao, with Liang Wenfeng listed as the final author.

Cost-Effective Scaling Through Infrastructure Optimisations

The researchers noted that mHC achieves these results through “efficient infrastructure-level optimisations,” delivering gains with “negligible computational overhead.” The paper also underscores Liang’s continued hands-on involvement in core research, despite his low public profile.

Building on ResNet and Previous Hyper-Connections

mHC builds on hyper-connections, first proposed by ByteDance in 2024 as a modification of the ResNet architecture. ResNet, developed by Microsoft Research Asia scientists including He Kaiming, stabilizes the training of very deep neural networks with residual (shortcut) connections, which add each layer's input directly to its output so that information is preserved across layers.
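The residual idea can be illustrated with a minimal, framework-free Python sketch. It is not code from ResNet, ByteDance or DeepSeek: the function names are hypothetical, and `untrained_layer` is a toy stand-in for a real neural-network layer. It shows the core property: with an identity shortcut, a block whose layer contributes nothing still passes its input through, whereas a plain stack of the same layers wipes the signal out.

```python
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]

def residual_block(x: Vector, layer: Layer) -> Vector:
    """ResNet-style block: output = x + layer(x) (identity shortcut + residual)."""
    return [xi + fi for xi, fi in zip(x, layer(x))]

def plain_stack(x: Vector, layer: Layer, depth: int) -> Vector:
    """A stack without shortcuts: each layer's output replaces its input."""
    for _ in range(depth):
        x = layer(x)
    return x

def residual_stack(x: Vector, layer: Layer, depth: int) -> Vector:
    """The same stack, but every layer is wrapped in a residual block."""
    for _ in range(depth):
        x = residual_block(x, layer)
    return x

def untrained_layer(x: Vector) -> Vector:
    # Hypothetical stand-in for a layer whose weights are still zero: it outputs nothing.
    return [0.0 for _ in x]

if __name__ == "__main__":
    x = [1.0, -2.0, 3.0]
    print(plain_stack(x, untrained_layer, depth=100))     # [0.0, 0.0, 0.0]
    print(residual_stack(x, untrained_layer, depth=100))  # [1.0, -2.0, 3.0]
```

Because the shortcut carries the input forward untouched, each layer only has to learn a correction to it, which is the intuition behind why very deep residual networks remain trainable.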

ByteDance’s HC method widened the residual stream into several parallel streams, increasing the network's expressive capacity without a meaningful increase in computational overhead. DeepSeek researchers argued, however, that HC did not fully address rising memory costs, which limited its practical scalability for large-model training. mHC adds a manifold constraint to the hyper-connections to improve both computational and cost efficiency.
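The article does not say which manifold mHC uses. As an illustrative assumption, the sketch below takes the constraint to be that the matrix mixing the parallel residual streams must be doubly stochastic (non-negative, with every row and column summing to 1), enforced with Sinkhorn-Knopp normalization. Under that assumption, mixing can shift signal between streams but cannot inflate the total as layers are stacked, whereas an unconstrained mixing matrix can compound growth layer after layer. The function names are hypothetical, each stream is reduced to a single number for brevity, and this is not DeepSeek's implementation.

```python
import math
from typing import List

Matrix = List[List[float]]

def sinkhorn(logits: Matrix, iters: int = 50) -> Matrix:
    """Map a square matrix of unconstrained scores to an (approximately)
    doubly stochastic matrix via Sinkhorn-Knopp normalization.
    ASSUMPTION: used here only as an illustrative manifold constraint."""
    n = len(logits)
    m = [[math.exp(v) for v in row] for row in logits]  # strictly positive entries
    for _ in range(iters):
        for i in range(n):  # rescale each row to sum to 1
            s = sum(m[i])
            m[i] = [v / s for v in m[i]]
        for j in range(n):  # rescale each column to sum to 1
            s = sum(m[i][j] for i in range(n))
            for i in range(n):
                m[i][j] /= s
    return m

def mix_streams(h: Matrix, streams: List[float]) -> List[float]:
    """One residual-mixing step: stream i receives sum_j h[i][j] * streams[j].
    Each stream is one number here for brevity; in a real model it would be
    a hidden-state vector."""
    n = len(streams)
    return [sum(h[i][j] * streams[j] for j in range(n)) for i in range(n)]

def run_layers(h: Matrix, streams: List[float], depth: int) -> List[float]:
    """Apply the same mixing matrix at each of `depth` layers."""
    for _ in range(depth):
        streams = mix_streams(h, streams)
    return streams

if __name__ == "__main__":
    x = [1.0, 1.0]
    # Unconstrained mixing: rows sum to 1.2, so the signal grows ~1.2x per layer.
    h_free = [[0.9, 0.3], [0.3, 0.9]]
    print(sum(run_layers(h_free, x, depth=50)))  # roughly 2 * 1.2**50, about 1.8e4

    # Constrained mixing: columns sum to 1, so the total across streams is conserved.
    h_ds = sinkhorn([[1.0, 0.2], [-0.5, 0.3]])
    print(sum(run_layers(h_ds, x, depth=50)))  # stays at about 2.0
```

The conservation follows from the column sums: the total of the outputs is the sum over j of (column sum j) times stream j, which equals the original total when every column sums to 1.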

“mHC will help address current limitations and potentially illuminate new pathways for the evolution of next-generation foundational architectures,” the researchers stated.

Paper Publication and Industry Expectations

DeepSeek uploaded the paper to the open-access repository arXiv, continuing its practice of sharing major technical research publicly, as it did with the papers for its earlier R1 and V3 models. Industry experts, including Florian Brand of Trier University, said DeepSeek’s papers often signal the technical direction of its next-generation models.

Expectations are high that DeepSeek could release its next major model ahead of the Spring Festival in mid-February, following the pattern it set last year with the R1 model.

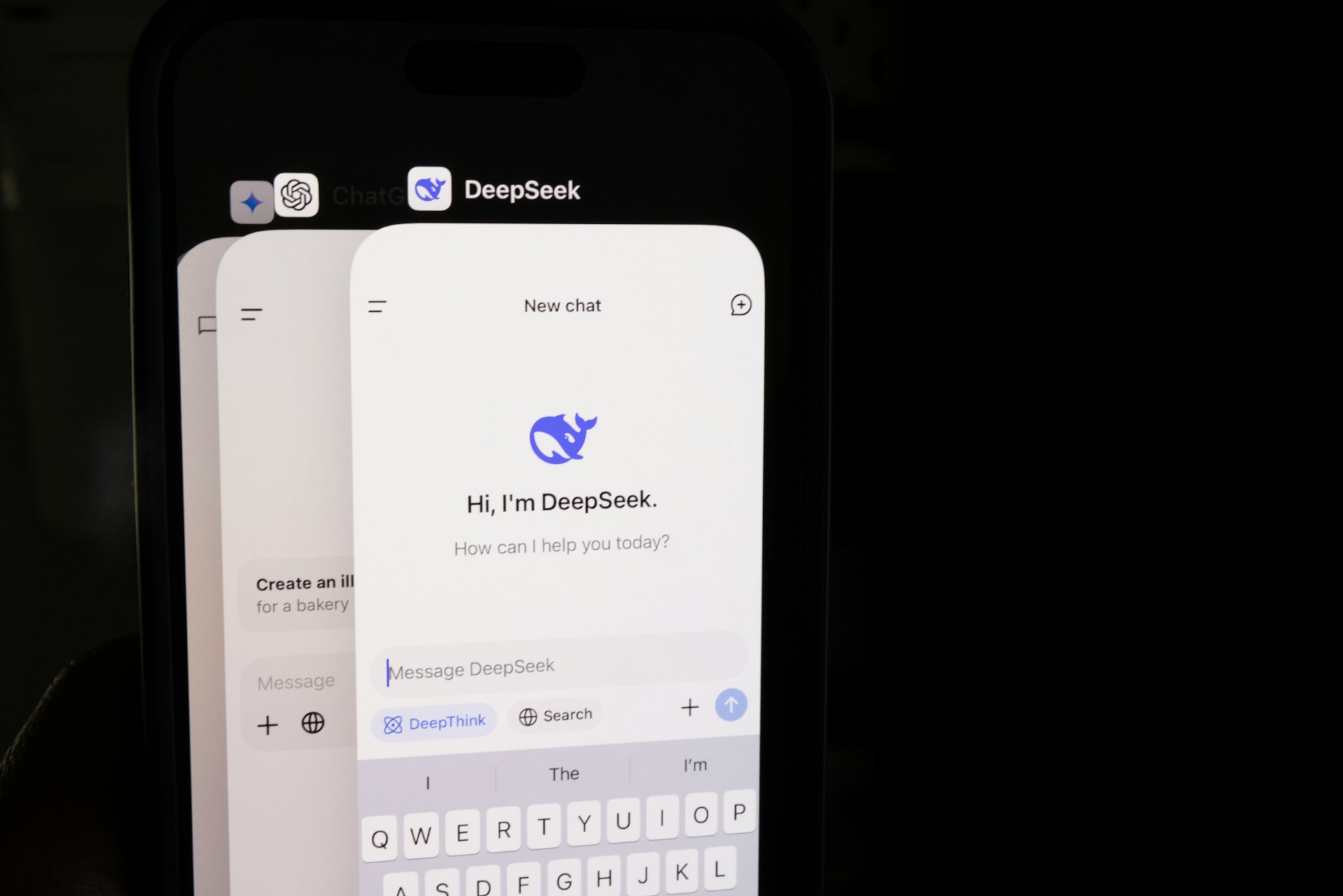

PHOTO: UNSPLASH

This article was created with AI assistance.

Saturday, 28-02-26
