In artificial intelligence, infrastructure matters. On December 2, 2025, Amazon Web Services (AWS) launched its much-anticipated AI training chip, Trainium3. The launch signals a push by AWS to challenge established players like NVIDIA and to change how organizations build, train, and deploy large-scale AI models. AWS cites higher performance, lower cost, and better energy efficiency than the previous generation, but does Trainium3 live up to the hype? This article explores what Trainium3 offers, why it matters, and how it could reshape the AI hardware landscape.

What Is Trainium3 and What Are Its Key Specs

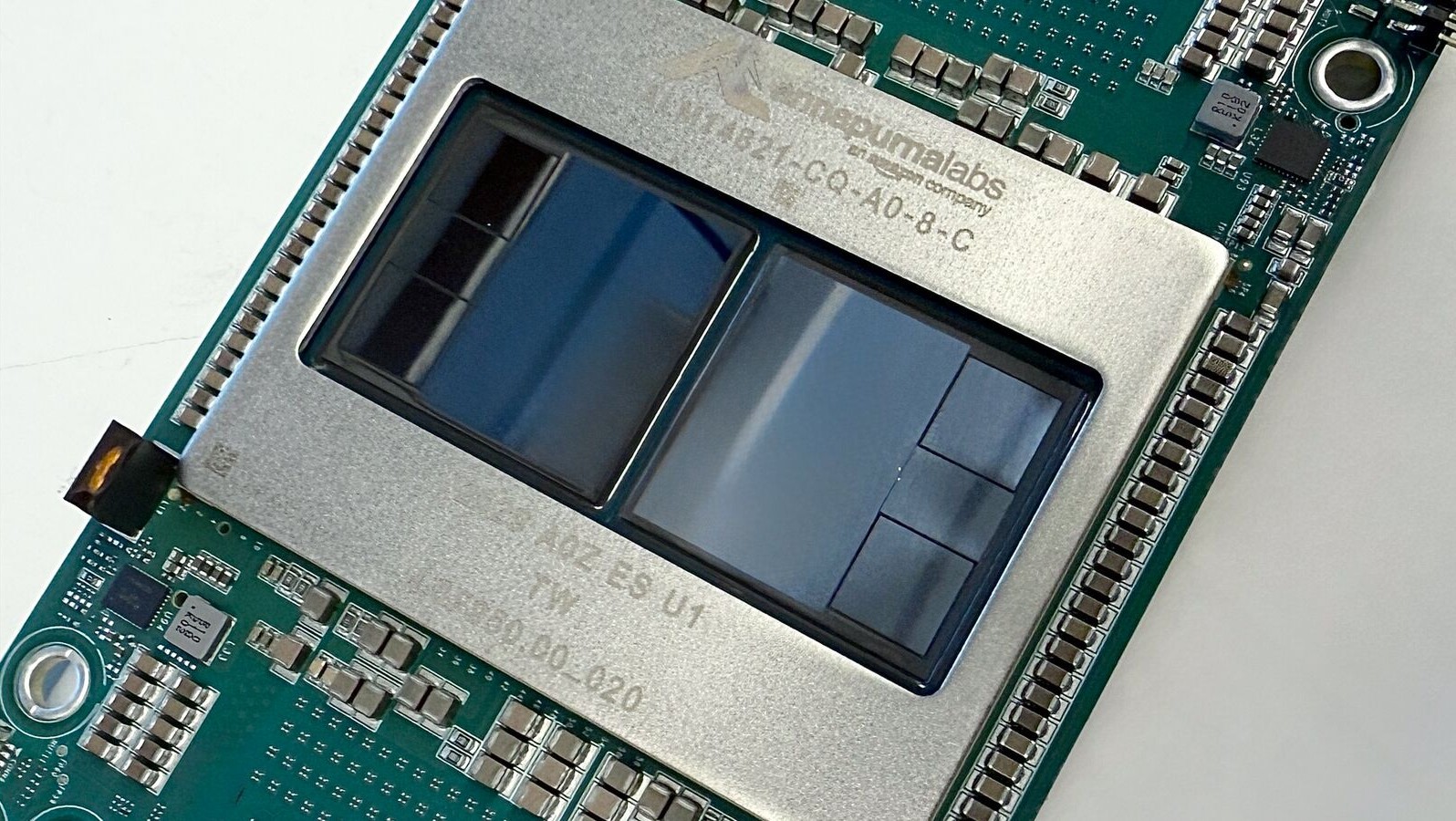

Trainium3 is AWS’s third-generation AI training chip, built on a 3-nanometer (nm) process. The chip is not sold as standalone hardware; instead, AWS offers server instances, called Amazon EC2 “Trn3 UltraServers”, that integrate Trainium3 for large-scale AI training and inference.

Here are some of the headline specs that make Trainium3 noteworthy:

- Each Trainium3 chip delivers 2.52 PFLOPs of FP8 compute power.

- Every chip includes 144 GB of HBM3e memory with up to 4.9 TB/s of memory bandwidth: 1.5× the memory capacity and 1.7× the bandwidth of the previous generation, Trainium2.

- In a server configuration, AWS can deploy up to 144 Trainium3 chips per UltraServer, yielding 362 FP8 PFLOPs aggregate compute, and up to ~20.7 TB HBM3e memory with memory bandwidth reaching ~706 TB/s.

- Compared to Trainium2 UltraServers, Trainium3-based servers deliver up to 4.4× more compute performance, nearly 4× more memory bandwidth, and 4× better energy efficiency.

- Network and interconnect improvements: a new fabric called NeuronSwitch-v1 doubles internal bandwidth for faster chip-to-chip communication, reducing latency below 10 microseconds.

These specs position Trainium3 as a top-tier AI accelerator, designed to handle not just conventional AI workloads, but also cutting-edge demands such as multimodal models, reasoning tasks, real-time video generation, and large-scale inference with high concurrency.
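The per-chip and per-UltraServer figures above can be cross-checked with simple arithmetic. The sketch below assumes the aggregate numbers are a straight linear scaling of the per-chip numbers across 144 chips and uses decimal units (1 TB = 1000 GB). The function names are illustrative, and the Trainium2 values are only what the quoted 1.5× and 1.7× ratios imply, not figures stated in this article.

```python
# Per-chip figures quoted in the article for Trainium3.
CHIP_FP8_PFLOPS = 2.52
CHIP_HBM_GB = 144
CHIP_BW_TBPS = 4.9
CHIPS_PER_ULTRASERVER = 144


def ultraserver_totals(chips: int = CHIPS_PER_ULTRASERVER) -> dict:
    """Scale the per-chip figures linearly to a full UltraServer."""
    return {
        "fp8_pflops": chips * CHIP_FP8_PFLOPS,
        "hbm_tb": chips * CHIP_HBM_GB / 1000,  # decimal TB (assumption)
        "bandwidth_tbps": chips * CHIP_BW_TBPS,
    }


def implied_trainium2() -> dict:
    """Back out Trainium2 per-chip values from the quoted 1.5x / 1.7x ratios."""
    return {
        "hbm_gb": CHIP_HBM_GB / 1.5,
        "bandwidth_tbps": CHIP_BW_TBPS / 1.7,
    }


if __name__ == "__main__":
    for name, value in ultraserver_totals().items():
        print(f"UltraServer {name}: {value:.1f}")
    for name, value in implied_trainium2().items():
        print(f"Implied Trainium2 {name}: {value:.1f}")
```

Under these assumptions the totals come to roughly 362.9 FP8 PFLOPs, 20.7 TB of HBM3e, and 705.6 TB/s, which matches the rounded UltraServer figures in the list. The ratios imply about 96 GB and about 2.9 TB/s per Trainium2 chip.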

Why Trainium3 Matters — Performance, Cost, and Cloud Strategy

Superior Performance and Efficiency

The performance boost from Trainium2 to Trainium3 is substantial. For many standard workloads, AWS claims customers can expect roughly 3× higher throughput per chip, alongside 4× faster response times compared to the previous generation.

This performance jump comes with much better energy efficiency. By delivering up to 4× more performance per watt than Trainium2, Trainium3 reduces the power needed for a given amount of work. For data centers and organizations that run intensive training jobs, such as large language models, multimodal AI, and video generation, this translates directly into lower energy bills and more sustainable compute infrastructure.
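To see how a performance-per-watt gain turns into an energy saving, consider a fixed amount of work: energy is work divided by efficiency. The sketch below is a back-of-the-envelope model, not a measurement. The workload size and the baseline efficiency are hypothetical placeholders, and the model counts only accelerator power, ignoring cooling, networking, and host overhead.

```python
def energy_kwh(work_pflop: float, pflops_per_kw: float) -> float:
    """Energy for a fixed job.

    work_pflop     total work in PFLOP (operations, not a rate)
    pflops_per_kw  sustained PFLOP/s delivered per kW drawn
    """
    kw_seconds = work_pflop / pflops_per_kw  # PFLOP / (PFLOP/s per kW) = kW*s
    return kw_seconds / 3600                 # kW*s -> kWh


def energy_saving_fraction(efficiency_gain: float) -> float:
    """Fraction of energy saved for the same work at `efficiency_gain`x perf/watt."""
    return 1 - 1 / efficiency_gain


if __name__ == "__main__":
    job = 3.6e6          # hypothetical job size in PFLOP
    baseline_eff = 1.0   # hypothetical baseline, PFLOP/s per kW
    before = energy_kwh(job, baseline_eff)
    after = energy_kwh(job, baseline_eff * 4.0)  # the article's "4x" claim
    print(f"baseline: {before:.0f} kWh, at 4x efficiency: {after:.0f} kWh")
    print(f"saving: {energy_saving_fraction(4.0):.0%}")
```

With these placeholder inputs, a 4× efficiency gain cuts the energy for the same job from 1000 kWh to 250 kWh, a 75% reduction. Since the 4× figure is a vendor claim, real savings depend on the workload.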

Cost Advantage Compared to GPUs

One of the main motivations behind AWS building its own AI silicon is cost efficiency. According to AWS and media reports, using Trainium3 can result in substantial savings on training and inference costs compared to high-end GPUs.

For enterprise customers, this cost advantage could make AI development more accessible — reducing the barrier to entry for organizations that previously couldn’t afford to maintain large GPU clusters. This is especially important as models grow larger and compute demands increase.
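One way to reason about such cost claims is to compare price per unit of work rather than price per hour. The sketch below is illustrative only: the `Accelerator` class, the prices, and the throughput values are all hypothetical placeholders, not published AWS or GPU pricing.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    price_per_hour: float       # hypothetical USD per accelerator-hour
    relative_throughput: float  # work completed per hour, arbitrary units

    def cost_per_unit_work(self) -> float:
        """What one unit of work costs on this accelerator."""
        return self.price_per_hour / self.relative_throughput


def savings(candidate: Accelerator, baseline: Accelerator) -> float:
    """Fractional cost saving of `candidate` over `baseline` for the same work."""
    return 1 - candidate.cost_per_unit_work() / baseline.cost_per_unit_work()


if __name__ == "__main__":
    # Placeholder numbers chosen only to exercise the formula.
    gpu = Accelerator("hypothetical-gpu", price_per_hour=10.0, relative_throughput=1.0)
    custom = Accelerator("hypothetical-custom-chip", price_per_hour=6.0, relative_throughput=1.0)
    print(f"saving vs baseline: {savings(custom, gpu):.0%}")
```

The point of the model is that a cheaper hourly rate only helps if throughput on the actual workload holds up. A chip that is 40% cheaper per hour but 40% slower saves nothing.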

Strategic Independence and Cloud Differentiation

By building and deploying its own custom AI chips, AWS is reducing its dependency on third-party vendors like NVIDIA. This gives AWS more control over performance tuning, supply chain, pricing, and infrastructure evolution. The availability of Trainium3-based UltraServers marks a critical step in that direction.

Moreover, because AWS offers these chips in cloud instances, customers don’t need to manage hardware; they can simply provision infrastructure as needed. This lowers the barrier for enterprises to adopt powerful AI infrastructure without directly investing in data center hardware.

With AWS’s global footprint, Trainium3 could shift the competitive dynamics in AI cloud infrastructure and potentially erode some of NVIDIA’s dominance.

Use Cases Best Suited for Trainium3

Trainium3 isn’t just about horsepower; it also enables use cases that were previously too costly or inefficient to run.

- Training Large Language Models (LLMs) or Multimodal Models

  - As model sizes grow, compute, memory, and bandwidth demands increase dramatically, making Trainium3's architecture well-suited for advanced AI development.

- Real-time Inference

  - Improved throughput and reduced latency support applications like chatbots, text/image/video generation, or any large-scale AI service needing speed and capacity.

- Video Generation and Multimodal AI

  - For workloads requiring heavy parallel processing and multimodal input-output, Trainium3’s high memory and bandwidth offer significant advantages.

- Cost-Constrained Enterprises and Startups

  - Trainium3’s cloud-based offering enables organizations with limited budgets to scale AI workloads affordably.

- Sustainable and Energy-Efficient AI Projects

  - With improved power efficiency, Trainium3 appeals to organizations prioritizing environmental impact or reducing long-term operating costs.
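The memory and bandwidth figures quoted earlier (144 GB of HBM3e and 4.9 TB/s per chip) give a rough feel for how model size maps onto chips. The sketch below is a deliberately simplified estimate. It counts model weights only, leaving out activations, optimizer state, KV cache, and communication overhead, and it assumes token generation is limited purely by reading every weight once per token. The model sizes are hypothetical examples.

```python
import math

HBM_PER_CHIP_GB = 144    # per-chip capacity quoted in the article
BW_PER_CHIP_GBPS = 4900  # 4.9 TB/s per chip, in GB/s (decimal units)


def weight_footprint_gb(params_billion: float, bytes_per_param: float) -> float:
    """Weights only: 1e9 params * bytes per param / 1e9 bytes per GB."""
    return params_billion * bytes_per_param


def min_chips_for_weights(params_billion: float, bytes_per_param: float) -> int:
    """Smallest chip count whose combined HBM holds the weights."""
    footprint = weight_footprint_gb(params_billion, bytes_per_param)
    return math.ceil(footprint / HBM_PER_CHIP_GB)


def decode_tokens_per_s_ceiling(params_billion: float, bytes_per_param: float,
                                chips: int) -> float:
    """Bandwidth-bound ceiling for one sequence: all weights read once per token."""
    footprint = weight_footprint_gb(params_billion, bytes_per_param)
    return chips * BW_PER_CHIP_GBPS / footprint


if __name__ == "__main__":
    # Hypothetical models: 70B parameters in BF16 (2 bytes), 400B in FP8 (1 byte).
    for params, width in [(70, 2), (400, 1)]:
        chips = min_chips_for_weights(params, width)
        ceiling = decode_tokens_per_s_ceiling(params, width, chips)
        print(f"{params}B @ {width} B/param: {chips} chip(s), "
              f"<= {ceiling:.1f} tokens/s per sequence")
```

Under these assumptions, a 70B-parameter model in BF16 needs 140 GB for weights and just fits on one chip, while a 400B-parameter model in FP8 needs at least three. Real deployments need headroom beyond this, so treat the results as lower bounds on chip count and upper bounds on speed.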

What Are the Challenges and What We Don’t Yet Know

Even with its strengths, Trainium3 faces several challenges.

- Ecosystem Lock-In and Software Compatibility

  - Although AWS provides the Neuron SDK and integrates with multiple AI frameworks, some libraries may not be optimized for Trainium yet.

- Entrenched GPU Ecosystem

  - NVIDIA’s dominance and extensive software ecosystem pose a significant barrier to migration.

- Real-World Performance Validation

  - While performance claims are impressive, results will vary depending on workload type and optimization.

- Hybrid Environments

  - Organizations that mix Trainium and GPU servers may face interoperability challenges.

What Trainium3 Means for the Future of AI Infrastructure

The launch of Trainium3 represents a broader shift in AI infrastructure strategy — toward highly efficient, cloud-deployed, custom silicon for advanced AI workloads.

Key implications include:

- More democratized AI development, enabling startups to access powerful compute on demand

- Competitive pressure on GPU vendors, prompting faster innovation

- Faster AI research cycles, due to more accessible compute

- Greater sustainability, driven by improved energy efficiency

Trainium3’s success, however, depends heavily on adoption, software ecosystem maturity, and real-world workload performance.

Conclusion

Trainium3 marks a major milestone for AWS and could be a game-changer in the AI infrastructure landscape. With state-of-the-art specs and significant improvements in performance and efficiency, Trainium3 UltraServers offer a strong value proposition for companies training or deploying large-scale AI models.

Its launch could challenge GPU dominance, democratize AI infrastructure, and accelerate AI innovation globally. However, its ultimate impact will depend on how quickly enterprises adopt the new architecture and how effectively AWS continues improving the supporting ecosystem.
