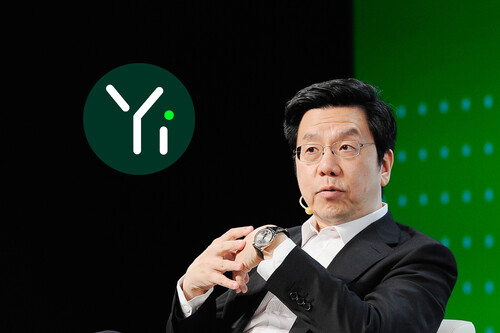

01.ai, a Chinese artificial intelligence company, has made waves in the AI industry by training one of its advanced AI models with just 2,000 GPUs and a relatively modest budget of $3 million. This feat stands in stark contrast to competitors like OpenAI, which reportedly spent between $80 million and $100 million to train its GPT-4 model. According to Kai-Fu Lee, the founder and CEO of 01.ai, this achievement underscores the company's ability to innovate and maximize limited resources, a necessity given the restrictions Chinese companies face due to U.S. export regulations on high-performance GPUs.
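To put the two budgets side by side, the short Python calculation below divides the reported GPT-4 estimates by 01.ai's $3 million figure. It uses only the numbers quoted above, which are reported rather than audited, and the constant and function names are illustrative.

```python
# Back-of-envelope comparison of reported training budgets.
# All figures are reported estimates quoted in the article, not audited costs.
YI_BUDGET_USD = 3_000_000      # 01.ai's reported training budget
GPT4_LOW_USD = 80_000_000      # low end of the reported GPT-4 training cost
GPT4_HIGH_USD = 100_000_000    # high end of the reported GPT-4 training cost


def budget_ratio(other_usd: float, base_usd: float) -> float:
    """Return how many times larger other_usd is than base_usd."""
    return other_usd / base_usd


low = budget_ratio(GPT4_LOW_USD, YI_BUDGET_USD)    # about 26.7
high = budget_ratio(GPT4_HIGH_USD, YI_BUDGET_USD)  # about 33.3
print(f"GPT-4's reported budget is roughly {low:.0f}x to {high:.0f}x larger")
```

On these reported figures, GPT-4's training spend was roughly 27 to 33 times larger than 01.ai's.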

Lee’s revelation has stunned many in the tech world, especially in Silicon Valley. He highlighted the cost efficiency of 01.ai’s approach, noting that despite having fewer GPUs and a significantly smaller budget than its American counterparts, the company still produced a model that competes at the highest level. For context, OpenAI’s GPT-3 was trained on a cluster of roughly 10,000 Nvidia V100 GPUs, and later models such as GPT-4 and GPT-5 reportedly required even larger computational resources.

Despite these limitations, 01.ai has shown that top-tier AI capabilities can be achieved without vast financial resources. Lee emphasized that the key to this success lies not only in efficient use of hardware but also in the company’s meticulous engineering and optimization. One major focus has been the inference process, a critical aspect of AI performance. To that end, 01.ai implemented a multi-layer caching system and built a specialized inference engine that prioritizes memory management over raw computation. This has let the company cut its inference costs to just 10 cents per million tokens, roughly one-thirtieth of what comparable models charge.
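01.ai has not published how its caching layers work. As a generic illustration of the trade-off described above, spending memory to avoid repeating computation, here is a minimal Python sketch of a hypothetical two-tier LRU cache. A small fast tier (think accelerator memory) sits in front of a larger, slower tier (think host memory); entries evicted from the fast tier are demoted rather than discarded, and only a miss in both tiers triggers the expensive computation. The class name, capacities, and the `expensive` stand-in for model work are assumptions for illustration, not 01.ai's design.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable


class TwoTierCache:
    """Hypothetical two-level LRU cache: a small fast tier backed by a larger slow tier.

    Entries evicted from the fast tier are demoted to the slow tier rather than
    discarded; a hit in the slow tier promotes the entry back to the fast tier.
    Only a miss in both tiers calls the expensive compute function.
    """

    def __init__(self, fast_capacity: int, slow_capacity: int) -> None:
        self.fast = OrderedDict()  # most recently used entries at the end
        self.slow = OrderedDict()
        self.fast_capacity = fast_capacity
        self.slow_capacity = slow_capacity
        self.misses = 0  # number of times compute() actually ran

    def _put_fast(self, key: Hashable, value: Any) -> None:
        self.fast[key] = value
        self.fast.move_to_end(key)
        if len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)  # evict LRU entry
            self.slow[old_key] = old_value  # demote instead of discarding
            self.slow.move_to_end(old_key)
            if len(self.slow) > self.slow_capacity:
                self.slow.popitem(last=False)  # slow tier full: drop its LRU entry

    def get(self, key: Hashable, compute: Callable[[Hashable], Any]) -> Any:
        if key in self.fast:  # fast-tier hit
            self.fast.move_to_end(key)
            return self.fast[key]
        if key in self.slow:  # slow-tier hit: promote to the fast tier
            value = self.slow.pop(key)
        else:  # miss in both tiers: do the expensive work
            self.misses += 1
            value = compute(key)
        self._put_fast(key, value)
        return value


# Usage: `expensive` is a hypothetical stand-in for costly model computation.
calls = []


def expensive(prompt):
    calls.append(prompt)
    return prompt.upper()


cache = TwoTierCache(fast_capacity=2, slow_capacity=2)
for prompt in ["a", "b", "c", "a", "b", "c"]:
    cache.get(prompt, expensive)
print(cache.misses)  # 3: the second pass is served from the slow tier
```

In the usage run, the second pass over the same three keys never reaches `expensive`, so the costly work runs three times instead of six. A real inference engine would likely size its tiers in bytes rather than entry counts and choose cache keys suited to its workload; both are assumptions beyond what the article describes.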

01.ai's model, Yi-Lightning, ranked sixth in model performance on the LMSYS leaderboard at UC Berkeley. Although the company did not disclose the exact GPU types used, it is believed that the 2,000 GPUs were much less expensive than Nvidia's H100, which costs around $30,000 per unit. Yi-Lightning's low inference cost and efficient design let it process data at a fraction of the typical expense of other AI systems.
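The dollar figures here can be sanity-checked with simple arithmetic. The Python sketch below uses only numbers quoted in the article: it prices 2,000 GPUs at the cited H100 rate of about $30,000 and derives the comparable-model price implied by the claim that Yi-Lightning's inference is about 30 times cheaper. The one-billion-token workload is an arbitrary example, and comparing a hardware purchase price with a training budget is only a rough check, since the article does not say what the $3 million covers.

```python
# Back-of-envelope figures using only numbers quoted in the article.
H100_UNIT_PRICE_USD = 30_000      # approximate H100 price cited in the article
GPU_COUNT = 2_000                 # GPUs 01.ai reportedly used for training
TRAINING_BUDGET_USD = 3_000_000   # 01.ai's reported training budget

# Hypothetical: what 2,000 H100s would cost to buy outright.
h100_fleet_usd = H100_UNIT_PRICE_USD * GPU_COUNT
print(f"2,000 H100s: ${h100_fleet_usd:,}")
print(f"Ratio to reported budget: {h100_fleet_usd / TRAINING_BUDGET_USD:.0f}x")

# Inference pricing: $0.10 per million tokens, described as ~1/30 of comparable models.
YI_PRICE_PER_M_TOKENS = 0.10
implied_comparable_price = YI_PRICE_PER_M_TOKENS * 30
tokens = 1_000_000_000  # one billion tokens, an arbitrary example workload
yi_cost = YI_PRICE_PER_M_TOKENS * tokens / 1_000_000
print(f"Implied comparable price: ${implied_comparable_price:.2f} per million tokens")
print(f"Cost for 1B tokens at $0.10/M: ${yi_cost:.2f}")
```

Buying 2,000 H100s outright would cost about $60 million, roughly 20 times the reported budget, which makes the belief that cheaper GPUs were used plausible. At 10 cents per million tokens, a billion tokens would cost about $100, versus roughly $3,000 at the implied $3 per million for comparable models.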

Given the unique challenges faced by Chinese companies in the AI space—most notably limited access to cutting-edge GPUs due to the U.S. export bans—01.ai's success illustrates the importance of resourcefulness and creative problem-solving in the rapidly evolving field of AI. By focusing on minimizing bottlenecks in the training and inference processes, the company has set a new benchmark for cost-effective AI development.

Lee also touched on broader issues affecting the Chinese AI sector, such as lower valuations and more limited access to investment than U.S. tech giants enjoy. Despite these hurdles, 01.ai’s performance suggests that innovative engineering and strategic resource management can help level the playing field, even in a highly competitive and resource-intensive industry.

As AI plays an ever-greater role in global business and technological advancement, 01.ai's approach may inspire other companies, both in China and beyond, to rethink how they allocate resources and optimize performance. With the AI market growing rapidly, cost-effective strategies like 01.ai's may become increasingly important for companies aiming to compete globally.

Tom's Hardware

Friday, 30-01-26
