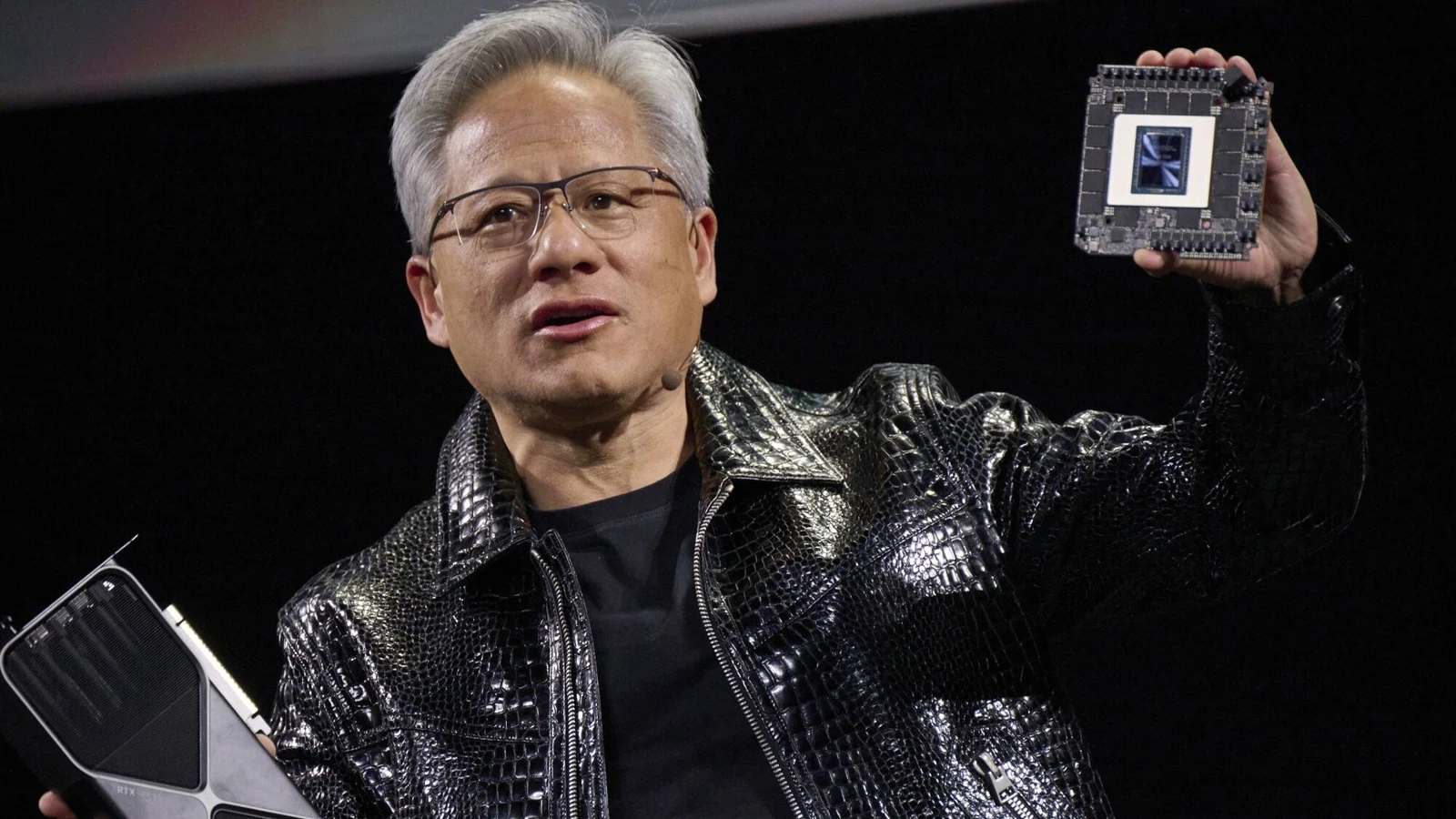

Nvidia’s CEO, Jensen Huang, recently said that the performance of the company’s AI chips is advancing faster than Moore’s Law, the benchmark that has set the pace of computing progress for decades. According to Huang, Nvidia’s gains now exceed the rate of improvement in chip performance that Moore’s Law has historically described.

Moore’s Law stems from a 1965 prediction by Intel co-founder Gordon Moore that the number of transistors on computer chips would roughly double every year, with chip performance rising accordingly. In recent years, however, that pace has slowed.

Despite this, Huang claims that Nvidia’s AI chips are advancing much faster. He pointed to the company’s latest data center superchip, which he said runs AI inference workloads more than 30 times faster than the previous generation.

Huang attributed this accelerated progress to Nvidia’s approach to innovation: by developing the architecture, the chip, the system, the libraries, and the algorithms at the same time, Nvidia can outpace Moore’s Law.

“If you do that, then you can move faster than Moore’s Law because you can innovate across the entire stack,” Huang remarked.

His comments come as many in the AI community question whether AI progress has plateaued. Leading AI labs, including OpenAI, Google, and Anthropic, rely on Nvidia’s chips to train and run their AI models, so continued improvement in those chips could translate into further gains in AI capabilities.

Huang also discussed what he calls “hyper Moore’s Law,” a term he has used before to describe the rapid pace at which AI hardware is improving. Rather than Moore’s Law alone, he said, three AI scaling laws are now at play: pre-training, post-training, and test-time compute. The last of these, test-time compute, is crucial for inference, the phase in which AI models generate answers.

The Nvidia CEO emphasized that these developments would not only boost performance but also reduce the cost of running AI models. He argued that current improvements in inference computing will eventually make inference more affordable, benefiting businesses and consumers alike.

Despite Nvidia’s dominant position in the AI hardware market, there are concerns about the high cost of its chips, particularly as tech companies shift their focus to inference. Models such as OpenAI’s o3, which relies on extensive test-time computation, have raised questions about affordability.

Huang, however, insists that rapid performance improvements will make these models more accessible. He said Nvidia is focused on building chips that are not only faster but also cheaper in the long run.

SOURCE: TECHCRUNCH | PHOTO: BLOOMBERG

Friday, 27-02-26