Social media platforms are increasingly grappling with the rise of AI-generated content. Adam Mosseri, head of Instagram, recently emphasized the need for more context in posts, particularly around AI-generated images and text. In a series of Threads posts on December 16, 2024, Mosseri urged users not to blindly trust online content, noting that AI is increasingly capable of producing highly convincing posts that could easily mislead audiences.

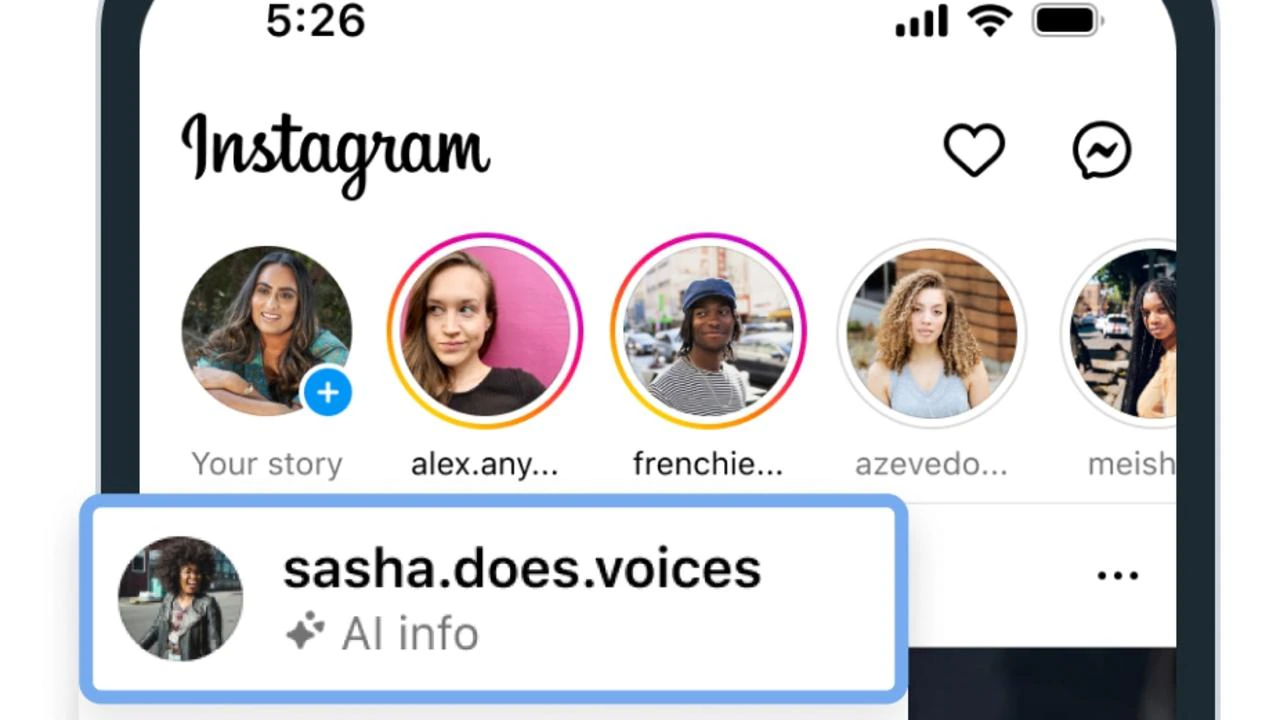

As AI technology advances, it becomes harder to distinguish between real and artificial content. Mosseri acknowledged this challenge, highlighting that while platforms like Instagram should do their best to label AI-generated content, some posts may slip through the cracks. This uncertainty has prompted calls for greater transparency in social media.

“We must label AI-generated content as best we can,” Mosseri wrote. However, he admitted that current efforts might not catch all AI content. He suggested that social platforms also need to provide more context about who is sharing the content. This approach would allow users to evaluate the credibility of posts and make more informed decisions about what to believe.

This shift in perspective aligns with broader concerns about the accuracy and trustworthiness of online information. Just as chatbots sometimes give incorrect answers with complete confidence, AI-generated content can be convincing and misleading at the same time. For users, checking the source of a post can help them avoid falling victim to misinformation. Mosseri's comments echo this need for accountability, urging social platforms to work harder to ensure users can easily judge the authenticity of posts.

Currently, Instagram and Meta's other platforms do not offer enough context to help users distinguish between real and AI-generated content. However, Mosseri's comments suggest that Meta could soon introduce new rules aimed at improving transparency. While it is not yet clear what specific changes will be made, user-driven moderation, similar to the tools used by platforms such as X (formerly Twitter) and YouTube, appears increasingly likely.

As AI continues to influence social media dynamics, Mosseri's call for more robust content labeling and source transparency highlights the growing need for platforms to adapt to these new challenges. Users, meanwhile, must become more discerning in their online interactions to avoid falling prey to AI-driven deception.

The Verge

Saturday, 28-02-26