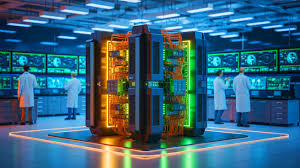

Artificial intelligence has reached a point where its advancement hinges on one key piece of infrastructure: supercomputers. These machines, especially those optimized for AI, are shaping global competitiveness across defense, healthcare, climate science, and even geopolitical strategy. In 2025, the race to build the world’s most powerful AI supercomputers has intensified, with governments and tech giants vying for supremacy in computing capability.

AI supercomputers are no longer just scientific curiosities; they are strategic assets. Their ability to perform quadrillions, and at the top end quintillions, of calculations per second empowers groundbreaking work in fields that demand intensive machine learning, massive data crunching, and large language model (LLM) training.

Let’s explore who’s leading the charge in this AI arms race, what these machines are used for, and how this shift is rewriting the rules of global power.

Why AI Supercomputers Matter Now More Than Ever

The growth of artificial intelligence is inherently tied to computational power. While consumer-facing tools like ChatGPT and Copilot grab headlines, the unseen engines behind these innovations are data centers and supercomputers with astonishing capabilities.

For instance, training a large AI model like OpenAI’s GPT series or Meta’s Llama requires months of work across thousands of GPUs running in parallel. Traditional computing infrastructure simply can’t meet these demands. This is where purpose-built AI supercomputers come in.
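To make the "months across thousands of GPUs" claim concrete, here is a rough back-of-envelope sketch. It assumes the widely used rule of thumb that training a dense model takes about 6 × parameters × tokens floating point operations. Every number below (model size, token count, GPU count, per-GPU throughput, utilization) is a hypothetical placeholder, not a figure from any vendor or lab.

```python
def training_days(params, tokens, gpus, peak_flops_per_gpu, utilization):
    """Estimate wall-clock training time in days.

    Uses the approximate rule of thumb: total FLOPs ~ 6 * params * tokens.
    All inputs are illustrative assumptions, not measured values.
    """
    total_flops = 6 * params * tokens
    # Real clusters rarely sustain peak throughput, so scale by utilization.
    effective_flops_per_sec = gpus * peak_flops_per_gpu * utilization
    seconds = total_flops / effective_flops_per_sec
    return seconds / 86_400  # seconds per day


# Hypothetical scenario: 70B-parameter model, 2T training tokens,
# 2,048 GPUs at an assumed 3e14 FLOP/s peak each, 40% utilization.
days = training_days(
    params=70e9,
    tokens=2e12,
    gpus=2048,
    peak_flops_per_gpu=3e14,
    utilization=0.4,
)
print(f"Estimated training time: {days:.1f} days")
```

Under these assumed inputs the estimate lands at several weeks on a single cluster, and it grows in direct proportion to model size and token count, which is why larger models push training runs into months.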

AI supercomputers differ from traditional high-performance computing (HPC) machines because they are optimized for matrix calculations, deep learning algorithms, and real-time AI inferencing. Instead of being judged purely on double-precision floating point operations per second (FLOPS), these machines are also rated on AI-specific metrics such as training performance and inference throughput, which are typically measured at lower numerical precision.

As AI models grow more sophisticated, the countries and companies with the fastest and most efficient AI supercomputers will have a significant edge in everything from economic forecasting to autonomous defense systems.

Leading the Pack: Countries and Companies Behind Top AI Supercomputers

Several powerful AI supercomputers dominate the global leaderboard, including systems in the U.S., China, Japan, and Europe.

1. Frontier (USA)

Housed at the Oak Ridge National Laboratory and powered by AMD processors and GPUs, Frontier was the first supercomputer to break the exascale barrier on the public TOP500 ranking, delivering over 1.1 exaflops of performance. While designed for scientific research, it has direct applications for AI training, particularly in climate modeling and bioinformatics.

2. Fugaku (Japan)

Developed by RIKEN and Fujitsu, Fugaku is another heavyweight contender. Although initially built for HPC tasks, it’s increasingly used for AI workloads, especially in medical simulations and pandemic response modeling.

3. LUMI (Finland/EU)

One of the most powerful systems in Europe, LUMI combines sustainability with performance by running on 100% renewable energy. It's optimized for AI and data analytics and aims to advance European digital sovereignty.

4. Sunway TaihuLight and Tianhe-3 (China)

Although China is not fully transparent about the specifications of its newest systems, it has made rapid progress in building indigenous AI supercomputers. These systems are critical to national AI development strategies, with a focus on military applications and large-scale surveillance AI.

5. Condor Galaxy (USA/UAE)

Cerebras Systems and the UAE-based technology group G42 have invested heavily in AI infrastructure with the Condor Galaxy series. Built on Cerebras wafer-scale systems rather than conventional GPUs, with its first installations hosted in U.S. data centers, this network of interconnected supercomputers is tailored for generative AI and LLM training at massive scale.

The growing presence of hyperscalers like Microsoft Azure, Google Cloud, and Amazon Web Services (AWS) in AI computing underscores a larger shift: cloud-native supercomputing. These platforms offer modular AI supercomputing services without the need for physical access to the hardware, enabling rapid scaling for startups and enterprises alike.

From Defense to Climate: Use Cases of AI Supercomputers

The power of AI supercomputers is being harnessed across a spectrum of industries and public sectors:

1. Defense and Security:

AI models trained on defense datasets are being used to analyze satellite imagery, detect cyber threats, and manage autonomous drone fleets. Countries with leading AI supercomputers are integrating them into strategic operations and predictive war games.

2. Healthcare and Genomics:

High-performance AI systems are revolutionizing drug discovery, protein folding simulations, and pandemic modeling. AI supercomputers can cut development cycles for new treatments and vaccines by months or even years.

3. Energy and Environment:

Supercomputers help optimize power grid distribution using AI, predict renewable energy yields, and model the environmental impact of climate policies. Exascale AI computing is essential for accurate climate simulations and disaster forecasting.

4. Finance and Markets:

Hedge funds and central banks use AI models trained on massive economic datasets to detect market patterns, predict crashes, and automate trading at microsecond speeds. AI supercomputers offer unparalleled data processing capabilities for these use cases.

5. AI Model Training:

The most resource-intensive use of supercomputers today is the training of large language models and computer vision systems. Training GPT-4 or similar models may take months using thousands of GPUs, costing tens of millions of dollars.
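The "tens of millions of dollars" figure follows from simple arithmetic on GPU-hours. The sketch below is illustrative only: the GPU count, run length, and hourly rate are assumed placeholder values, not reported figures for GPT-4 or any other model.

```python
def training_cost_usd(gpus, days, usd_per_gpu_hour):
    """Estimate the compute cost of a training run from GPU-hours.

    All inputs are hypothetical; real pricing varies by provider and contract.
    """
    gpu_hours = gpus * days * 24
    return gpu_hours * usd_per_gpu_hour


# Hypothetical scenario: 10,000 GPUs running for 90 days
# at an assumed rate of $2.00 per GPU-hour.
cost = training_cost_usd(gpus=10_000, days=90, usd_per_gpu_hour=2.0)
print(f"Estimated compute cost: ${cost / 1e6:.1f} million")  # $43.2 million
```

This counts compute time alone. Staff, storage, networking, failed runs, and experimentation would all add to the total.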

The Future: Who Will Control the Next AI Supercomputer Frontier?

As the AI revolution accelerates, control over computing infrastructure will become a central issue of national policy. In the United States, the CHIPS and Science Act incentivizes domestic semiconductor manufacturing and research, with the aim of reducing reliance on foreign chipmakers for the hardware that AI supercomputers depend on. In China, heavy investment in local silicon and AI chips aims to work around U.S. export controls and build sovereign AI systems.

Meanwhile, the private sector is forming alliances to accelerate the development of custom AI chips and supercomputing clusters. Nvidia’s role in this ecosystem remains dominant, but competition from AMD, Intel, and newer players like Cerebras and Graphcore is intensifying.

The transition from exascale toward zettascale computing is already in motion. Researchers are exploring liquid-cooled data centers, quantum-accelerated AI workloads, and AI-designed chips that optimize themselves for specific workloads.

This creates a new geopolitical layer where AI leadership is not just about algorithms or data, but also about who controls the chips, the servers, and the electric grids that power the future.


Monday, 09-02-26
